Introduction to Terminology around Attestation in Trusted and Confidential Computing

In my master's thesis, I compiled a glossary of terms related to attestation, with a focus on Trusted and Confidential Computing. Although much of the literature and many projects predate the development of a common terminology, we now have definitions for most concepts, which lets us discuss them precisely. This article adapts my original section to provide an overview of the key concepts, primarily based on RFC 9334[1]: Remote ATtestation procedureS (RATS) Architecture, for which a useful cheat sheet also exists.

Root-of-Trust (RoT)

The Trusted Computing Group (TCG) defines a RoT component as:

a component that performs one or more security-specific functions, such as measurement, storage, reporting, verification, and/or update. It is trusted always to behave in the expected manner, because its misbehavior cannot be detected (such as by measurement) under normal operation. [2]

A RoT can range from a hardware module such as a Trusted Platform Module (TPM)[3] to a certificate issued by a Public Key Infrastructure (PKI).

Attestation – Attester, Attestable, Claims and Evidence

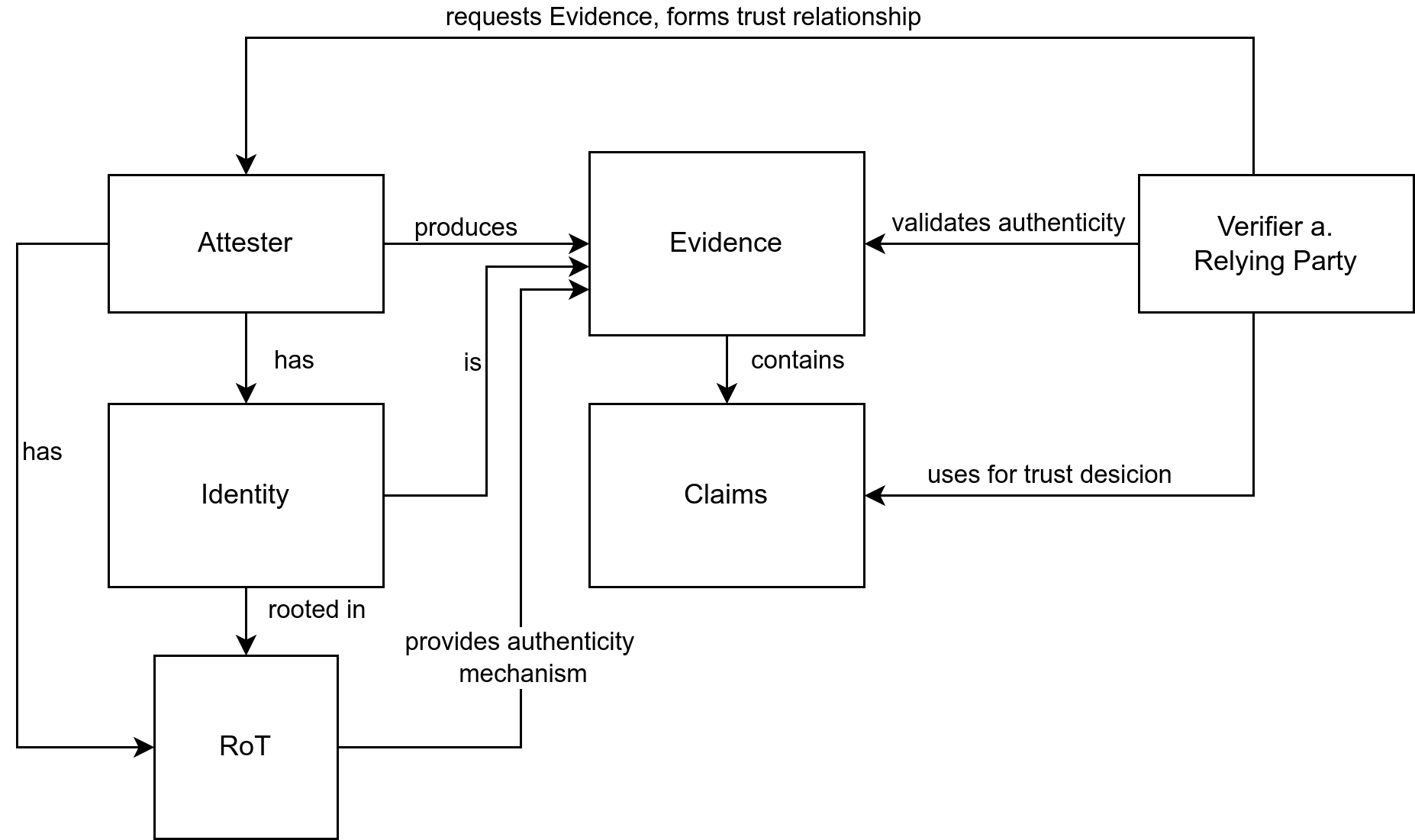

Attestation describes the entire process of establishing a trust relationship between two or more parties by using Evidence. We mainly follow the roles and artifacts defined in RFC 9334 Section 4[1:1] and the extensions proposed by Sardar et al.[4]. The figure above illustrates how the different terms are connected to each other.

Attester

RATS defines Attester as:

A role performed by an entity (typically a device) whose Evidence must be appraised in order to infer the extent to which the Attester is considered trustworthy, such as when deciding whether it is authorized to perform some operation.[1:2] (Section 4.1)

We extend the definition by additionally requiring that an Attester be able to produce Evidence about an identity that is unique, is protected against outsiders, can be used to prove the Attester's identity to third parties, and can be used by third parties to seal data for the Attester. The issue of identity is also considered in newer drafts of related documents, for example in Section 4 of RATS Endorsements[5].

Claims and Evidence

RATS defines a Claim as:

A piece of asserted information, often in the form of a name/value pair. Claims make up the usual structure of Evidence and other RATS conceptual messages.[1:3] (Section 4.2)

and Evidence as:

A set of Claims generated by an Attester to be appraised by a Verifier. Evidence may include configuration data, measurements, telemetry, or inferences.[1:4] (Section 4.2)

We argue that Evidence also needs to include a mechanism to verify the authenticity of the Evidence, specifically a way to validate that the Evidence was generated by the Attester (e.g., a signature). Authenticity says nothing about the state of the Attester. For example, the Claims could show that the Attester was compromised while the signature of the Evidence is still valid.
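The distinction between authentic Evidence and a healthy Attester can be illustrated with a minimal sketch. It is not taken from RFC 9334: the key, the Claim names, and the helper functions are hypothetical, and an HMAC over a shared key stands in for the asymmetric signature a real Attester would typically use.

```python
import hashlib
import hmac
import json

# Hypothetical key known to Attester and Verifier; an assumption for this sketch only.
ATTESTER_KEY = b"demo-attester-key"


def sign_claims(claims: dict, key: bytes) -> dict:
    """Bundle Claims with an authenticity tag to form Evidence."""
    payload = json.dumps(claims, sort_keys=True).encode()
    tag = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return {"claims": claims, "tag": tag}


def is_authentic(evidence: dict, key: bytes) -> bool:
    """Check that the Evidence came from the key holder and was not modified."""
    payload = json.dumps(evidence["claims"], sort_keys=True).encode()
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, evidence["tag"])


# Claims are name/value pairs; here they report an undesirable state.
evidence = sign_claims({"secure_boot": False, "firmware": "1.0.3"}, ATTESTER_KEY)

authentic = is_authentic(evidence, ATTESTER_KEY)  # the tag verifies
healthy = evidence["claims"]["secure_boot"]       # yet the Claim itself is bad

# Changing a Claim after signing invalidates the tag.
tampered = {"claims": {**evidence["claims"], "secure_boot": True}, "tag": evidence["tag"]}
tampered_authentic = is_authentic(tampered, ATTESTER_KEY)
```

Here `authentic` is true while `healthy` is false: the Evidence is genuine, but what it reports would not justify trusting the Attester.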

Local and Remote Attestation

We differentiate between Local and Remote Attestation in the same way Sardar, Fossati, and Frost do[4:1].

- Local: In this case the Attestation is done by the machine itself. This can be a check of a signature before a container is loaded into the runtime.

- Remote: In this case the Attestation is done by another party outside the machine. For example, this applies to a Verifier requesting Attestation from a different machine.

When talking about Attestation in the context of interaction between systems, we generally refer to Remote Attestation.

Verifier and Relying Party

We use the same definitions as RATS. The Verifier is defined as:

A role performed by an entity that appraises the validity of Evidence about an Attester and produces Attestation Results to be used by a Relying Party.[1:5] (Section 4.1)

and the Relying Party as:

A role performed by an entity that depends on the validity of information about an Attester for purposes of reliably applying application-specific actions. [1:6] (Section 4.1)

Depending on the implementation, the Verifier and the Relying Party can be the same component, which is often the case in real-world implementations[6]. Sardar, Fossati, and Frost describe this as the Verifying Relying Party model[4:2]. Later we see how the Verifier can produce data so that the Relying Party can form a trust relationship with the Attester.
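The split between the two roles can be sketched as follows. This is an illustrative sketch under stated assumptions, not an API defined by RATS: the policy, the Claim names, the `AttestationResult` type, and both functions are hypothetical, and the authenticity of the Evidence is assumed to have been checked already.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AttestationResult:
    attester_id: str
    passed: bool
    reasons: tuple


# Hypothetical appraisal policy: the required value for each Claim name.
POLICY = {"secure_boot": True, "debug_enabled": False}


def verify(attester_id: str, claims: dict) -> AttestationResult:
    """Verifier role: appraise Claims against a policy and emit an Attestation Result."""
    reasons = tuple(
        f"{name}: expected {want!r}, got {claims.get(name)!r}"
        for name, want in POLICY.items()
        if claims.get(name) != want
    )
    return AttestationResult(attester_id, passed=not reasons, reasons=reasons)


def relying_party_decision(result: AttestationResult) -> str:
    """Relying Party role: apply an application-specific action to the result."""
    return "release-secret" if result.passed else "deny"


good = verify("node-a", {"secure_boot": True, "debug_enabled": False})
bad = verify("node-b", {"secure_boot": True, "debug_enabled": True})
```

In a Verifying Relying Party setup, both functions would simply live in the same component; keeping them separate makes explicit that the Relying Party only consumes the Attestation Result and never needs to see the Evidence itself.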

Authenticity, Trustworthiness, and Trust

Given the Evidence, we can generally validate its authenticity easily. This does not necessarily imply that the Evidence can be trusted.

Authenticity in the context of Evidence means that the attesting party is able to validate that the Claims actually come from the Attester and were not manipulated. In many instances this also includes validating the identity of the Attester. For trust, we use the definition given by Bursell[7], which builds on work by Gambetta[8], the latter being the first major examination of trust in the social sciences:

Trust is the assurance that one entity holds that another will perform particular actions according to a specific expectation. […]

- First Corollary: Trust is always contextual

- Second Corollary: One of the contexts for trust is always time

- Third Corollary: Trust relationships are not symmetrical

[7:1] (p. 5)

A simple example of the first and third corollaries, even without technology, is the relationship between you and your bank. You would trust the bank only with your money, not with health matters. Furthermore, just because you trust the bank with your money does not mean that it trusts you with its own. For the second corollary: just because something was true a decade ago does not necessarily mean it applies today.
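The three corollaries can also be read as constraints on how a trust relationship is modeled as data. The following is a minimal sketch of that reading, not a structure proposed by Bursell; the `TrustRelationship` type, its field names, and the dates are hypothetical.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class TrustRelationship:
    truster: str       # who holds the trust
    trustee: str       # who is trusted (direction matters: not symmetrical)
    context: str       # what the trust covers (always contextual)
    valid_until: date  # when the trust lapses (time is always a context)


def trusts(relations, truster, trustee, context, on):
    """Return True if a matching, unexpired relationship exists."""
    return any(
        r.truster == truster
        and r.trustee == trustee
        and r.context == context
        and on <= r.valid_until
        for r in relations
    )


# The bank example: you trust the bank with money, for a limited time.
relations = [TrustRelationship("you", "bank", "money", date(2030, 1, 1))]

today = date(2025, 1, 1)
money_ok = trusts(relations, "you", "bank", "money", today)
health_ok = trusts(relations, "you", "bank", "health", today)
reverse_ok = trusts(relations, "bank", "you", "money", today)
later_ok = trusts(relations, "you", "bank", "money", date(2031, 1, 1))
```

Only `money_ok` holds: changing the context, reversing the direction, or moving past the expiry date each yields no trust relationship.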

Sometimes, trustworthiness and trust are used interchangeably, but there is a distinct difference. We argue that trustworthiness is the information, properties, and context of a component that are used to conclude that the component is trustworthy, which is similar to the ideas proposed by O’Doherty[9] and Cheshire[10] in the field of ontology. The authenticity of the Evidence is an example of something that contributes to trustworthiness but does not by itself make the component trustworthy.

Trust, in comparison, is the decision and relationship that is established between the Attester and the other party based on the Evidence. There can be different levels of trust and therefore multiple relationships between the same two components.

As Bursell stated, trustworthiness cannot be a global property of the Attester itself. If that were the case, the trust relationships of two different parties with the same Attester would be identical, which is not always the case.

Integrity

For Integrity, we follow the definition from the United States Code[11], which is also used by FIPS 200:

integrity, which means guarding against improper information modification or destruction, and includes ensuring information nonrepudiation and authenticity.

Trusted Computing

Combining trust, trustworthiness, authenticity, and integrity, we arrive at Trusted Computing. It describes the concept that an entity has some trust relationship with a computer system (or a part of it) that then behaves in an expected way[12]. How this relationship is formed (e.g., via Remote Attestation) and which parts of the system it covers depend on the entity, system, and environment. The TCG produces a set of standards for building systems that can leverage Trusted Computing.

Confidential Computing and Confidentiality

For the definition of Confidential Computing, we follow the definition published by the Confidential Computing Consortium (CCC):

The Confidential Computing Consortium has defined Confidential Computing as "the protection of data in use by performing computation in a hardware-based, attested Trusted Execution Environment", and identified three primary attributes for what constitutes a Trusted Execution Environment: data integrity, data confidentiality, and code integrity. As described in "Confidential Computing: Hardware-Based Trusted Execution for Applications and Data", four additional attributes may be present (code confidentiality, programmability, recoverability, and attestability) but only attestability is strictly necessary for a computational environment to be classified as Confidential Computing.

Note that Confidential Computing includes the notion of integrity, but integrity is independent of confidentiality. Integrity is not required to establish trust in a component or to verify confidentiality, but it does protect the data against actors on the same system.

[1] Henk Birkholz et al. Remote ATtestation procedureS (RATS) Architecture. Request for Comments RFC 9334. Internet Engineering Task Force, Jan. 2023. url: https://datatracker.ietf.org/doc/rfc9334

[2] Trusted Computing Group. TCG Glossary. May 11, 2017. url: https://trustedcomputinggroup.org/wp-content/uploads/TCG-Glossary-V1.1-Rev-1.0.pdf

[3] Trusted Computing Group. Trusted Platform Module Library Family “2.0” Level 00 Revision 01.59. Trusted Computing Group, Nov. 8, 2019. url: https://trustedcomputinggroup.org/wp-content/uploads/TCG_TPM2_r1p59_Part1_Architecture_pub.pdf

[4] Muhammad Sardar, Thomas Fossati, and Simon Frost. SoK: Attestation in Confidential Computing. Jan. 20, 2023. Preprint. url: https://www.researchgate.net/publication/367284929_SoK_Attestation_in_Confidential_Computing

[5] Dave Thaler, Henk Birkholz, and Thomas Fossati. RATS Endorsements. Internet Draft draft-ietf-rats-endorsements-07. Internet Engineering Task Force, Aug. 25, 2025. url: https://datatracker.ietf.org/doc/draft-ietf-rats-endorsements/

[6] Harsh Vardhan Mahawar. Harmonizing Open-Source Remote Attestation: My LFX Mentorship Journey. Confidential Computing Consortium Blog, Jul. 29, 2025. url: https://confidentialcomputing.io/2025/07/29/harmonizing-open-source-remote-attestation-my-lfx-mentorship-journey/

[7] Mike Bursell. Trust in Computer Systems and the Cloud. John Wiley & Sons, Oct. 25, 2021. isbn: 978-1-119-69231-7.

[8] Diego Gambetta. “Can We Trust Trust?” In: Trust: Making and Breaking Cooperative Relations. Basil Blackwell, 1988, pp. 213–238. isbn: 0-631-15506-6.

[9] Kieran C. O’Doherty. “Trust, Trustworthiness, and Relationships: Ontological Reflections on Public Trust in Science”. In: Journal of Responsible Innovation 10.1 (Jan. 2, 2023), p. 2091311. issn: 2329-9460. doi: 10.1080/23299460.2022.2091311.

[10] Coye Cheshire. “Online Trust, Trustworthiness, or Assurance?” In: Daedalus 140.4 (Oct. 2011), pp. 49–58. issn: 0011-5266, 1548-6192. doi: 10.1162/DAED_a_00114.

[11] United States Code, 2006 Edition, Supplement 2, Title 44 - PUBLIC PRINTING AND DOCUMENTS. Jan. 5, 2009.

[12] Chris Mitchell and Institution of Electrical Engineers, eds. Trusted Computing. IEE Professional Applications of Computing Series 6. London: Institution of Electrical Engineers, 2005. 313 pp. isbn: 978-0-86341-525-8.